Building an SVB Q&A chatbot with Next.js and LangChain JS

In this tutorial, I will build a customized AI chatbot using Next.js (React) and LangChain JS. Part of the point is to show how easy and fast it is to create something powerful.

First off, why is this even needed when we have a great chatbot in ChatGPT? Well, ChatGPT doesn’t work well with proprietary and custom data. There’s no easy way to load a large amount of custom data, and it doesn’t have any data after 2021, so it needs custom data to be knowledgeable about current events.

That’s where LangChain comes in. LangChain launched last year, and the library helps build custom chatbots and agents. It also has wrappers to help us with things like prompt templates, chunking (to break up long documents), and more. For a while, you had to use Python with LangChain, but that changed when they launched LangChain JS last month. Now we can easily integrate LangChain into our Next.js apps.

For this bot, I’ve loaded a Wikipedia document about the recent Silicon Valley Bank collapse. So, we can ask the bot questions about the collapse and hopefully get some clarity around the situation. Let’s get started!

First, let’s create our Next.js app:

npx create-next-app

Let’s download the new LangChain JS package.

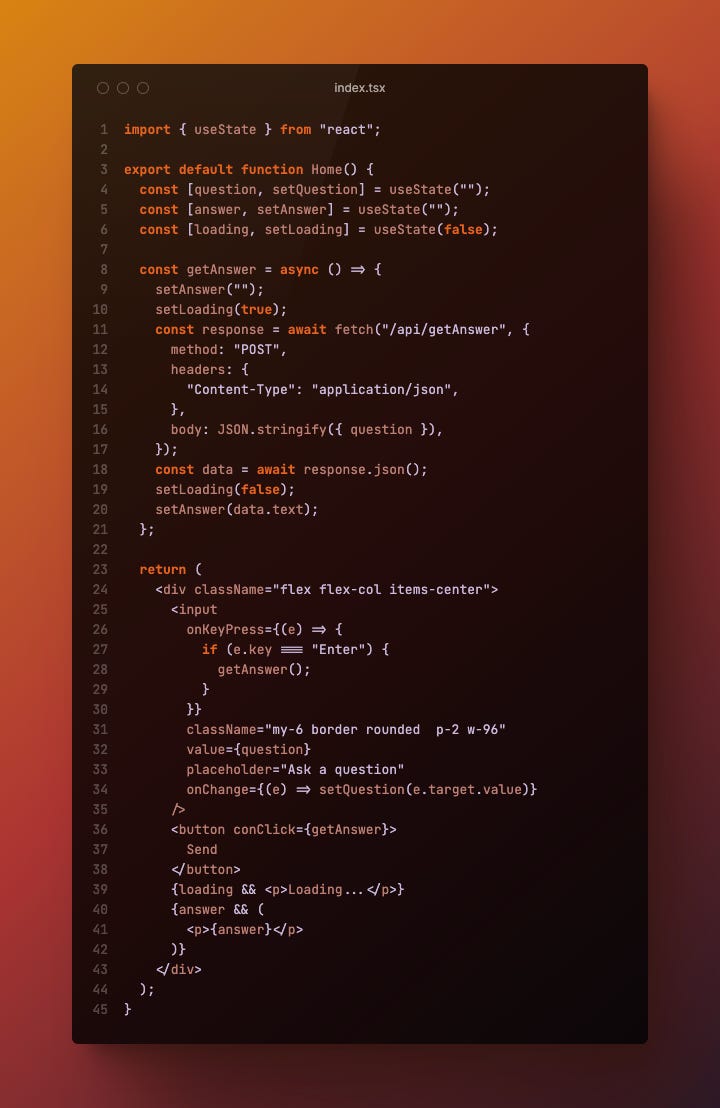

npm i langchain hnswlib-node

Now, let's build our simple frontend, which is just an input to collect the question, a submit button that will hit our backend to get the answer, and an API call when a user hits “submit.”
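Here’s a rough sketch of the API call behind the submit button. It assumes a hypothetical `/api/ask` route that accepts `{ question }` and returns `{ answer }`; the route name, the response shape, and the `askQuestion` helper are my own placeholders, not necessarily what the repo uses. The fetch function is passed in as an argument so the helper can be tried out without a browser.

```typescript
// Minimal shape of the parts of fetch() this helper relies on.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Hypothetical endpoint name; adjust it to match your own API route.
const ASK_ENDPOINT = "/api/ask";

// Sends the user's question to the backend and returns the answer text.
async function askQuestion(question: string, fetchFn: FetchLike): Promise<string> {
  const trimmed = question.trim();
  if (trimmed.length === 0) {
    throw new Error("Question must not be empty");
  }

  const response = await fetchFn(ASK_ENDPOINT, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ question: trimmed }),
  });
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }

  // Assumed response shape: { answer: string }.
  const data = (await response.json()) as { answer?: unknown };
  if (typeof data.answer !== "string") {
    throw new Error("Response did not contain an answer");
  }
  return data.answer;
}
```

In a React component, the submit handler would call something like `askQuestion(input, fetch)` and put the result into state.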

Next, let’s prepare our custom data. For this, I just copy/pasted the text from the Wikipedia article and dropped it into a “text.ts” file.

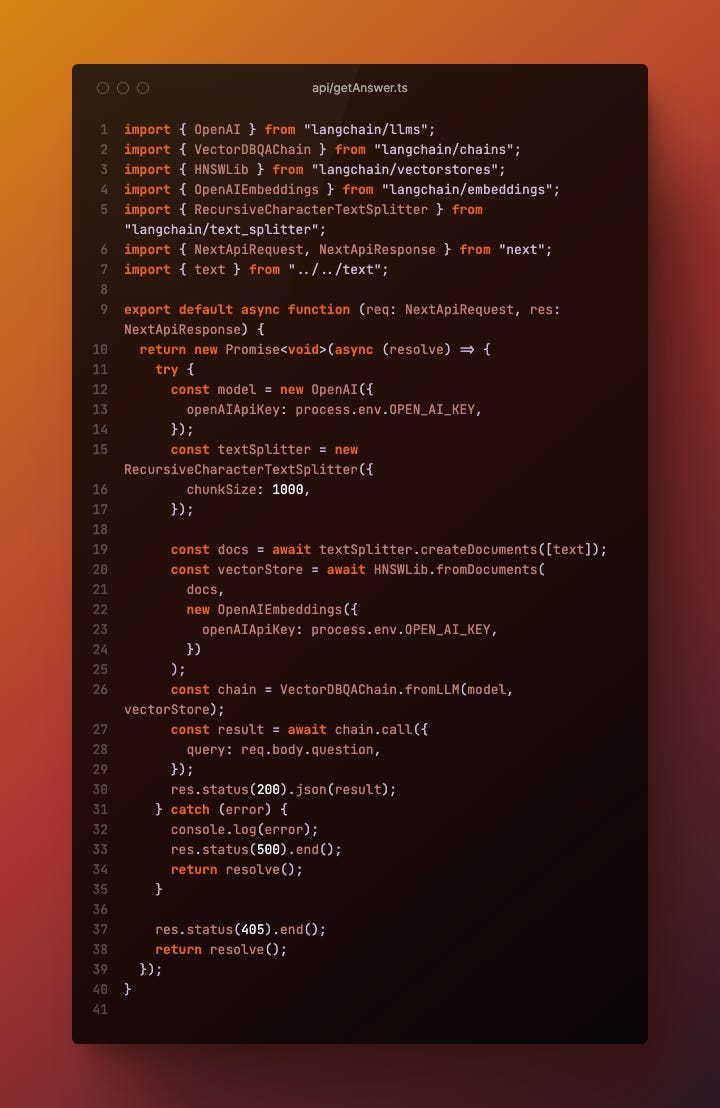

Now let’s work on our “backend.” I use backend in quotes because Next.js gives us serverless functions out of the box, which act as our backend or API. There are several options we can use from LangChain to create our chatbot. The one I decided to go with is called VectorDBQAChain. This is a great option because it takes care of embeddings for us. Embeddings represent our text as numeric vectors so that when a user asks a question, the most relevant passages can be found quickly.

This code may look intimidating, but it’s actually pretty simple. First, on line 14, we are initializing our AI model, which is OpenAI. Then, in lines 17-22, we are preparing our text. First, we get the text from the file, then we split it into chunks using LangChain’s handy RecursiveCharacterTextSplitter class, and lastly, we create documents out of the chunks using another LangChain utility called createDocuments.
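To make the chunking idea concrete, here’s a simplified sketch of splitting text into overlapping chunks and wrapping them as documents. This is not LangChain’s RecursiveCharacterTextSplitter, which also tries to break on separators such as paragraphs and newlines before falling back to raw characters. The `splitIntoChunks` and `toDocuments` helpers are hypothetical, and the `Doc` shape only loosely mirrors a LangChain document.

```typescript
// Splits text into chunks of at most chunkSize characters. Each chunk
// repeats the last `overlap` characters of the previous chunk for context.
function splitIntoChunks(text: string, chunkSize: number, overlap: number): string[] {
  if (chunkSize <= 0 || overlap < 0 || overlap >= chunkSize) {
    throw new Error("Need chunkSize > 0 and 0 <= overlap < chunkSize");
  }
  const chunks: string[] = [];
  const step = chunkSize - overlap;
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    if (start + chunkSize >= text.length) break; // this chunk reached the end
  }
  return chunks;
}

// A document here is just a chunk plus a note about where it came from.
interface Doc {
  pageContent: string;
  metadata: { index: number };
}

// Wraps each chunk in a document object, recording its position.
function toDocuments(chunks: string[]): Doc[] {
  return chunks.map((chunk, index) => ({ pageContent: chunk, metadata: { index } }));
}
```

The overlap is a design choice: repeating a little text across chunk boundaries makes it less likely that a sentence relevant to a question gets cut in half.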

Next, we create our vectorstore, which takes in the documents and the embeddings service of our choosing, which in this case is OpenAI embeddings. This is the part of the code where LangChain converts each chunk of our documents into a numeric vector (an embedding) that captures its meaning, and stores those vectors so they can be searched later.
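If the vectorstore feels abstract, here’s a toy version of the idea: embed each chunk as a vector, then rank chunks by cosine similarity to the question. Everything here (`toyEmbed`, `ToyVectorStore`) is a hypothetical stand-in. The real app gets its embeddings from OpenAI and uses a proper index (we installed hnswlib-node for that), whereas this toy embedding only counts hashed words, so it captures word overlap rather than meaning.

```typescript
// Toy stand-in for an embedding model: hashes each word into one of `dims`
// buckets and counts occurrences.
function toyEmbed(text: string, dims = 64): number[] {
  const vector = new Array<number>(dims).fill(0);
  const words = text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
  for (const word of words) {
    let hash = 0;
    for (let i = 0; i < word.length; i++) {
      hash = (hash * 31 + word.charCodeAt(i)) % dims;
    }
    vector[hash] += 1;
  }
  return vector;
}

// Cosine similarity: 1 means same direction, 0 means nothing in common.
function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Keeps every chunk alongside its vector and searches by brute force.
class ToyVectorStore {
  private entries: { text: string; vector: number[] }[] = [];

  add(texts: string[]): void {
    for (const text of texts) {
      this.entries.push({ text, vector: toyEmbed(text) });
    }
  }

  // Returns the k chunks most similar to the query, best match first.
  search(query: string, k: number): string[] {
    const queryVector = toyEmbed(query);
    return this.entries
      .map((entry) => ({ text: entry.text, score: cosineSimilarity(queryVector, entry.vector) }))
      .sort((x, y) => y.score - x.score)
      .slice(0, k)
      .map((entry) => entry.text);
  }
}
```

A real vector index avoids comparing the question against every chunk, but the contract is the same: text goes in, and the most similar chunks come out.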

Finally, we create a chain, which is the chatbot, and then call that chain with the question so that the chain can look up an answer.
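Conceptually, a question-answering chain like this does three things: fetch the most relevant chunks, put them into a prompt, and send that prompt to the model. Here’s a minimal sketch of that flow. The `Retriever` and `Llm` types, the `answerQuestion` helper, and the prompt wording are all my own illustration, not LangChain’s actual internals or template.

```typescript
// Any function that turns a prompt into a completion, e.g. a thin wrapper
// around a model API. Hypothetical type for this sketch.
type Llm = (prompt: string) => Promise<string>;

// Any function that returns the k most relevant chunks for a question.
type Retriever = (question: string, k: number) => string[];

// Puts the retrieved chunks and the question into a single prompt.
function buildPrompt(question: string, contextChunks: string[]): string {
  return [
    "Use the following context to answer the question at the end.",
    "If the answer is not in the context, say that you don't know.",
    "",
    contextChunks.join("\n\n"),
    "",
    `Question: ${question}`,
    "Answer:",
  ].join("\n");
}

// Retrieve, build the prompt, then ask the model.
async function answerQuestion(
  question: string,
  retrieve: Retriever,
  llm: Llm,
  k = 4
): Promise<string> {
  const chunks = retrieve(question, k);
  const prompt = buildPrompt(question, chunks);
  const completion = await llm(prompt);
  return completion.trim();
}
```

Because the model only sees the retrieved chunks plus the instruction to admit when it doesn’t know, a prompt along these lines tends to keep answers grounded in our own data.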

Let’s see if it works. When I type in the question “what caused the SVB collapse?”, we get the answer:

“The collapse of SVB was caused by a bank run and the downgrading of its credit rating by Moody's Investors Service.”

Pretty good! Let’s give it something a bit more specific: “which companies had a lot of money with SVB?”. We get back:

“Companies such as Airbnb, Cisco, Fitbit, Pinterest, Block, Inc., Vox Media, and Mark Cuban reportedly had millions in the bank.”

Let’s try to trick it and ask a question that has nothing to do with SVB. “What happened to Apple?”. We get back: “Apple is not mentioned in this context, so I don't know what happened to them.” Nice.

It’s as simple as that! Using Next.js with LangChain, I can build a customized AI chatbot in under 30 minutes. Pretty incredible!

Some improvements we can make

Streaming: This will allow us to stream the answer to the frontend word by word, so that users can start to see the result and not have to wait for the full answer.
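As a rough idea of what streaming looks like on the consuming side, here’s a sketch that reads tokens from an async stream and reports the text so far after each one. The `fakeTokenStream` generator is a hypothetical stand-in for a real model stream, and `renderStream` is my own helper name; in a real app the tokens would arrive over the network.

```typescript
// Stand-in for a model that yields its answer piece by piece.
async function* fakeTokenStream(answer: string): AsyncGenerator<string> {
  for (const token of answer.match(/\S+\s*/g) ?? []) {
    await Promise.resolve(); // stands in for waiting on the network
    yield token;
  }
}

// Consumes a token stream and calls onUpdate with the text so far after
// each token, which is what the UI would render.
async function renderStream(
  tokens: AsyncIterable<string>,
  onUpdate: (partial: string) => void
): Promise<string> {
  let text = "";
  for await (const token of tokens) {
    text += token;
    onUpdate(text);
  }
  return text;
}
```

In a React component, `onUpdate` could simply be the state setter for the answer, so the text grows on screen as tokens arrive.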

Keep chat history: This is an important one that I didn’t have the time for. Ideally, we would keep the chat history on the frontend too. LangChain is saving it on the backend, which is cool, but our users need to be able to see it on the frontend as well.
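One possible shape for frontend chat history is a plain list of messages that grows with each question and answer. This is only a sketch: the `ChatMessage` type and the `appendMessage` and `formatHistory` helpers are hypothetical and not part of the repo.

```typescript
type Role = "user" | "bot";

interface ChatMessage {
  role: Role;
  text: string;
}

// Returns a new array instead of mutating the old one, which suits
// React state updates.
function appendMessage(
  history: readonly ChatMessage[],
  role: Role,
  text: string
): ChatMessage[] {
  return [...history, { role, text }];
}

// Renders the history as plain text, one message per line.
function formatHistory(history: readonly ChatMessage[]): string {
  return history
    .map((message) => `${message.role === "user" ? "You" : "Bot"}: ${message.text}`)
    .join("\n");
}
```

In the component, the history would live in state: append the user’s question on submit, then append the bot’s answer once the API call returns.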

This was fun! Here’s the code if you want to check it out: https://github.com/scottschindler/next_q_and_a